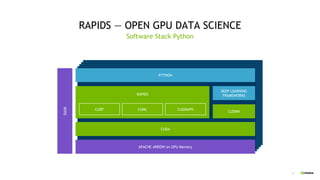

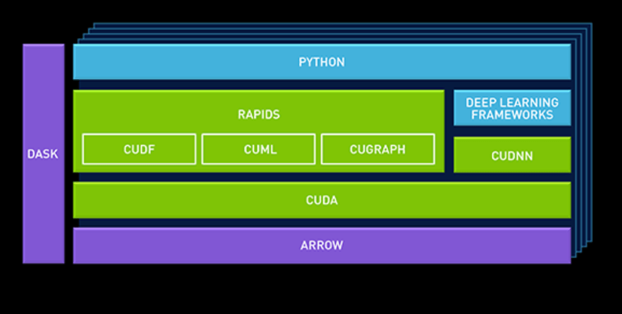

GPU-Powered Data Science (NOT Deep Learning) with RAPIDS | by Tirthajyoti Sarkar | DataSeries | Medium

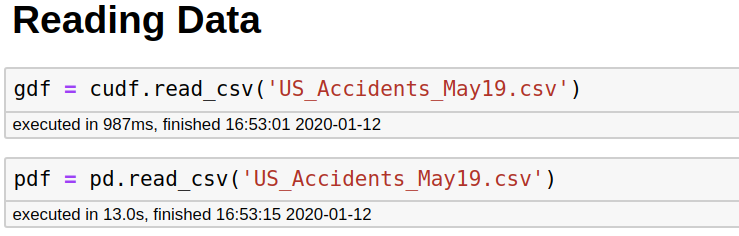

Pandas DataFrame Tutorial - Beginner's Guide to GPU Accelerated DataFrames in Python | NVIDIA Technical Blog

Machine Learning in Python: Main developments and technology trends in data science, machine learning, and artificial intelligence – arXiv Vanity

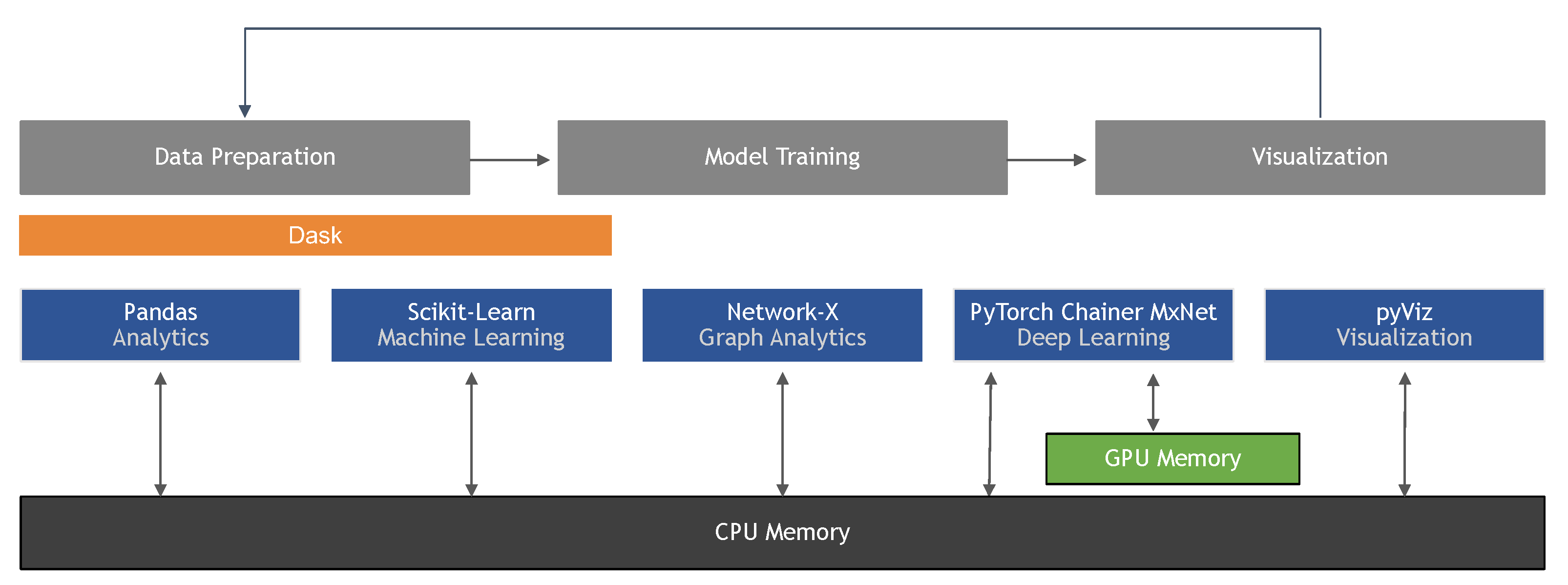

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

KDnuggets on Twitter: "Bye Bye #Pandas - here are a few good alternatives to processing larger and faster data in #Python #DataScience https://t.co/8Aik1uDfKJ https://t.co/jKzs4ChrYk"